The era of straightforward automation has given way to the sophisticated, dynamic world of Agentic AI systems. Today’s most capable AI systems no longer merely follow scripts or perform repetitive tasks; they autonomously analyze data, make decisions in real time, and continually learn from new information. This evolution from Robotic Process Automation (RPA) to autonomous Agentic AI marks a radical shift in operational strategies across industries.

Unlike conventional AI solutions, Agentic AI doesn’t just execute predefined rules; it proactively predicts scenarios, adapts strategies in real time, and can raise operational efficiency well beyond what rule-based automation achieves. Yet this autonomy introduces complex, dynamic vulnerabilities that traditional cybersecurity measures can’t effectively counter. AI autonomy is advancing faster than many organizations’ cybersecurity readiness, creating urgent strategic imperatives for IT leaders.

Understanding Agentic AI’s Autonomous Power

Agentic AI systems leverage advanced machine learning algorithms, deep neural networks, and reinforcement learning models to independently evaluate vast datasets, identify patterns, and take actions without human intervention. By minimizing latency and optimizing decision-making, these systems can reshape business operations, from predictive analytics and customer engagement to operational automation.

However, autonomy also means that AI systems are susceptible to manipulation without continuous oversight. Their dynamic nature and adaptability, while advantageous, expand the attack surface significantly, presenting fresh challenges in cybersecurity that demand comprehensive strategic responses.

The New Threat Landscape: AI vs. AI

The emergence of Agentic AI systems has created a radically different threat environment, one where artificial intelligence is both the defender and the attacker. Cybercriminals now leverage AI to launch increasingly sophisticated attacks, and the very autonomy that makes Agentic AI powerful also introduces novel vulnerabilities. The following four areas outline the key aspects of this evolving threat landscape:

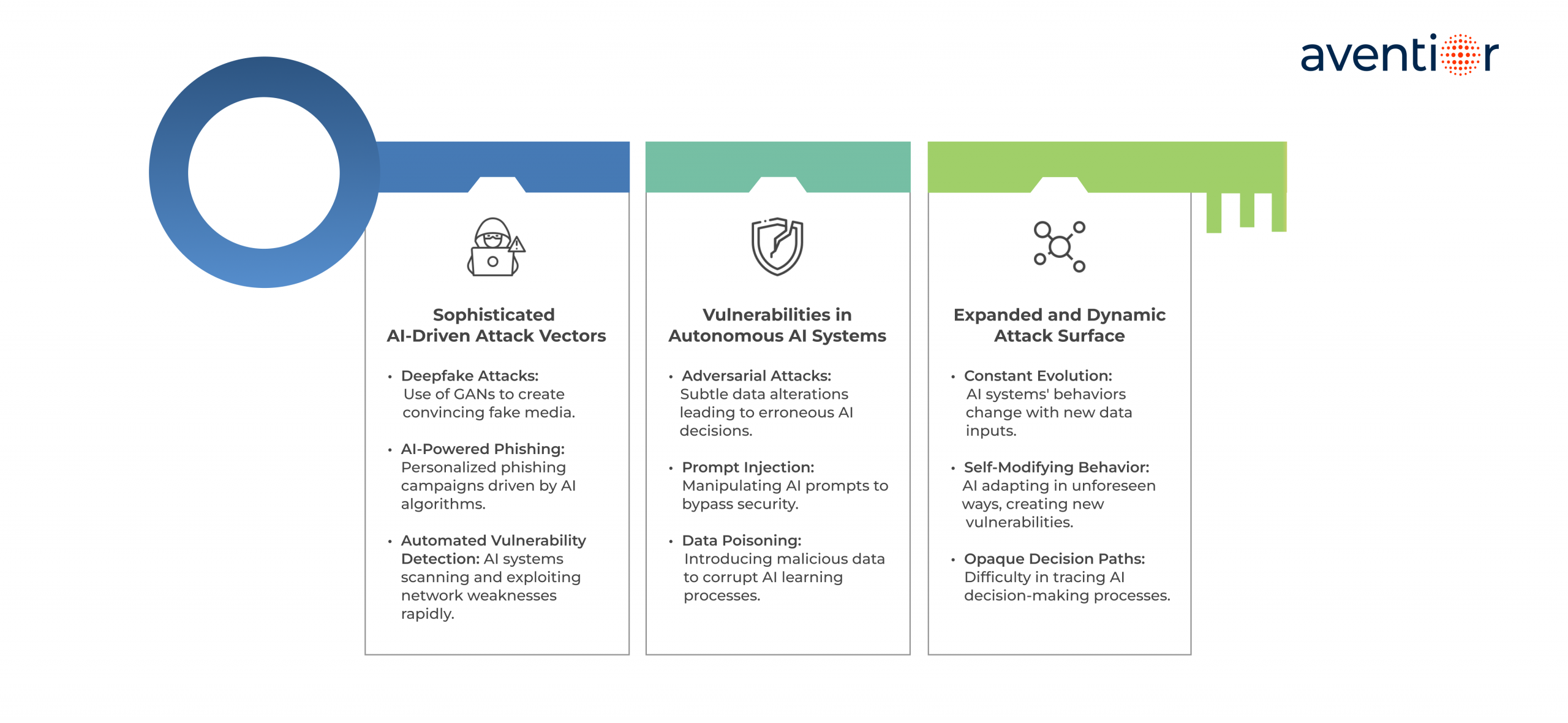

1. Sophisticated AI-Driven Attack Vectors

Cybercriminals are weaponizing AI to create hyper-realistic deepfake videos, voice clones, and personalized phishing campaigns that can fool even security-conscious employees. These AI-powered attacks are far more convincing and scalable than traditional methods, making them particularly dangerous for organizations unprepared for this new reality.

Common AI-enabled attack techniques include:

- Deepfake Attacks: Using generative models such as generative adversarial networks (GANs), attackers create realistic fake video or audio that impersonates executives, manipulates public opinion, or tricks employees into carrying out fraudulent requests.

- AI-Powered Phishing: AI models tailor phishing messages to individual recipients, predicting and exploiting their personal vulnerabilities, which can significantly increase the success rate of targeted campaigns.

- Automated Vulnerability Detection: Malicious AI systems rapidly scan networks for weaknesses and launch automated attacks, sharply reducing the time defenders have to detect and respond.

2. Vulnerabilities in Autonomous AI Systems

The very autonomy that makes Agentic AI powerful also makes it vulnerable. These systems can be manipulated through:

- Adversarial Attacks: Small, deliberately crafted changes to input data that mislead an AI system, potentially causing severe decision-making errors.

- Prompt Injection: Embedding malicious instructions in the natural-language input an AI system processes (a user message, email, document, or web page) so that it bypasses security controls or discloses unauthorized information.

- Data Poisoning: Corrupting training or feedback data to subtly bias what the AI learns, resulting in erroneous decisions and system degradation over time.
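
To make the idea of an adversarial attack concrete, here is a minimal sketch of the Fast Gradient Sign Method (FGSM), a well-known technique for crafting adversarial inputs, applied to a toy logistic-regression classifier. The weights, bias, input, and epsilon value are made-up illustrative numbers, not drawn from any real system; production models are far larger, but the principle of nudging each feature slightly in the direction that increases the model's loss is the same.

```python
import math

def predict_proba(weights, bias, x):
    """Probability of the positive class under a logistic-regression model."""
    z = sum(w * xi for w, xi in zip(weights, x)) + bias
    return 1.0 / (1.0 + math.exp(-z))

def fgsm_perturb(weights, bias, x, true_label, epsilon):
    """Craft an adversarial input with the Fast Gradient Sign Method.

    For logistic regression with binary cross-entropy loss, the gradient of
    the loss with respect to the input is (p - y) * w. Moving every feature by
    epsilon in the sign of that gradient increases the loss on the true label.
    """
    p = predict_proba(weights, bias, x)
    grad = [(p - true_label) * w for w in weights]

    def sign(g):
        return (g > 0) - (g < 0)

    return [xi + epsilon * sign(g) for xi, g in zip(x, grad)]

# Hypothetical toy model and input, chosen only for illustration.
weights, bias = [2.0, -1.0, 0.5], -0.2
x = [0.3, 0.1, 0.2]
x_adv = fgsm_perturb(weights, bias, x, true_label=1, epsilon=0.15)

print(f"original:    p={predict_proba(weights, bias, x):.3f}")      # classified positive
print(f"adversarial: p={predict_proba(weights, bias, x_adv):.3f}")  # classified negative
```

With these toy numbers, each feature moves by only 0.15, yet the predicted probability of the positive class drops from about 0.60 to about 0.47, flipping the decision. Adversarial training works by including such perturbed examples in the training data so the model learns to resist them.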

3. Expanded and Dynamic Attack Surface

Agentic AI systems are inherently dynamic; they adapt, learn, and evolve continuously based on new data and interactions. This constant evolution means the system’s internal state and behavior can change in real time, resulting in a fluid and unpredictable attack surface.

Unlike traditional software, where vulnerabilities are relatively static and patchable, Agentic AI introduces a moving target for security teams. Each system update, new data source, or external interaction may introduce unforeseen risks. Traditional security tools are ill-equipped to handle this level of variability.

Key Risks:

- Constant Change: AI systems evolve continuously, making their behavior and vulnerabilities less predictable.

- Self-Modifying Behavior: Agentic AI adapts to its environment, which can lead to unintended attack vectors over time.

- Opaque Decision Paths: AI decision-making often functions as a “black box,” making threat analysis and root-cause investigation difficult.

- Traditional Security Gaps: Rule-based and perimeter-focused security tools are not designed to secure evolving, autonomous systems.

- Hidden Dependencies: AI models may rely on third-party data streams or APIs, which expand the attack surface through indirect vectors.

Strategic Imperative:

Organizations must adopt real-time, AI-driven cybersecurity systems capable of continuously learning and adapting alongside the AI systems they protect.
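
As a minimal illustration of a baseline that keeps learning alongside the system it watches, the sketch below tracks an exponentially weighted moving mean and variance of one behavioral metric and flags values that deviate sharply from it. The metric (tool calls per minute from a single agent), the smoothing factor, the threshold, and the warm-up length are all assumptions chosen for the example; real AI-driven security platforms combine many signals and far richer models.

```python
import math
from dataclasses import dataclass

@dataclass
class AdaptiveBaseline:
    """Exponentially weighted baseline for one metric (hypothetical example)."""
    alpha: float = 0.1       # weight given to each new observation
    threshold: float = 3.0   # flag values more than this many std devs away
    warmup: int = 5          # observations absorbed before anything is flagged
    mean: float = 0.0
    var: float = 0.0
    count: int = 0

    def observe(self, value: float) -> bool:
        """Return True if value looks anomalous; otherwise fold it into the baseline."""
        self.count += 1
        if self.count == 1:
            self.mean = value
            return False
        std = math.sqrt(self.var)
        anomalous = (
            self.count > self.warmup
            and std > 0
            and abs(value - self.mean) / std > self.threshold
        )
        if not anomalous:
            # Incremental exponentially weighted mean and variance update.
            diff = value - self.mean
            incr = self.alpha * diff
            self.mean += incr
            self.var = (1 - self.alpha) * (self.var + diff * incr)
        return anomalous

# Hypothetical tool calls per minute from one agent; the last value is a burst.
calls_per_minute = [10, 11, 9, 10, 12, 10, 11, 9, 10, 60]
monitor = AdaptiveBaseline()
flags = [monitor.observe(v) for v in calls_per_minute]
print(flags)
```

In this sketch the baseline is updated only with observations that were not flagged, so a sudden burst of malicious activity cannot immediately redefine what counts as normal. The trade-off is that a legitimate, permanent change in behavior will keep being flagged until someone reviews it and resets the baseline.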

4. Regulatory and Compliance Challenges

As AI technologies evolve, so do the regulations that govern them. Around the world, lawmakers are introducing new frameworks to ensure that AI is used safely, ethically, and transparently. These regulations are especially focused on systems that operate autonomously or influence critical decisions.

The European Union’s AI Act is a prime example. It outlines strict obligations for high-risk AI applications, including requirements around transparency, data governance, and human oversight. In the United States, the NIST AI Risk Management Framework offers voluntary, detailed guidance for assessing and managing risks throughout the AI lifecycle.

For organizations deploying advanced AI systems within the scope of binding rules such as the EU AI Act, these requirements are not optional. Falling short can result in serious consequences:

- Financial penalties for non-compliance

- Suspension of AI services or operations

- Loss of credibility and customer trust

- Legal exposure and potential litigation

Security and compliance must be built into the foundation of any AI initiative. It is no longer effective to treat them as separate efforts or address them late in the process. Leading organizations are integrating regulatory alignment into every stage of AI development, from planning to post-deployment monitoring.

Key Insight: Compliance must be embedded into the AI strategy from the beginning. Addressing it only after deployment increases risk, slows progress, and can damage the organization’s long-term resilience.

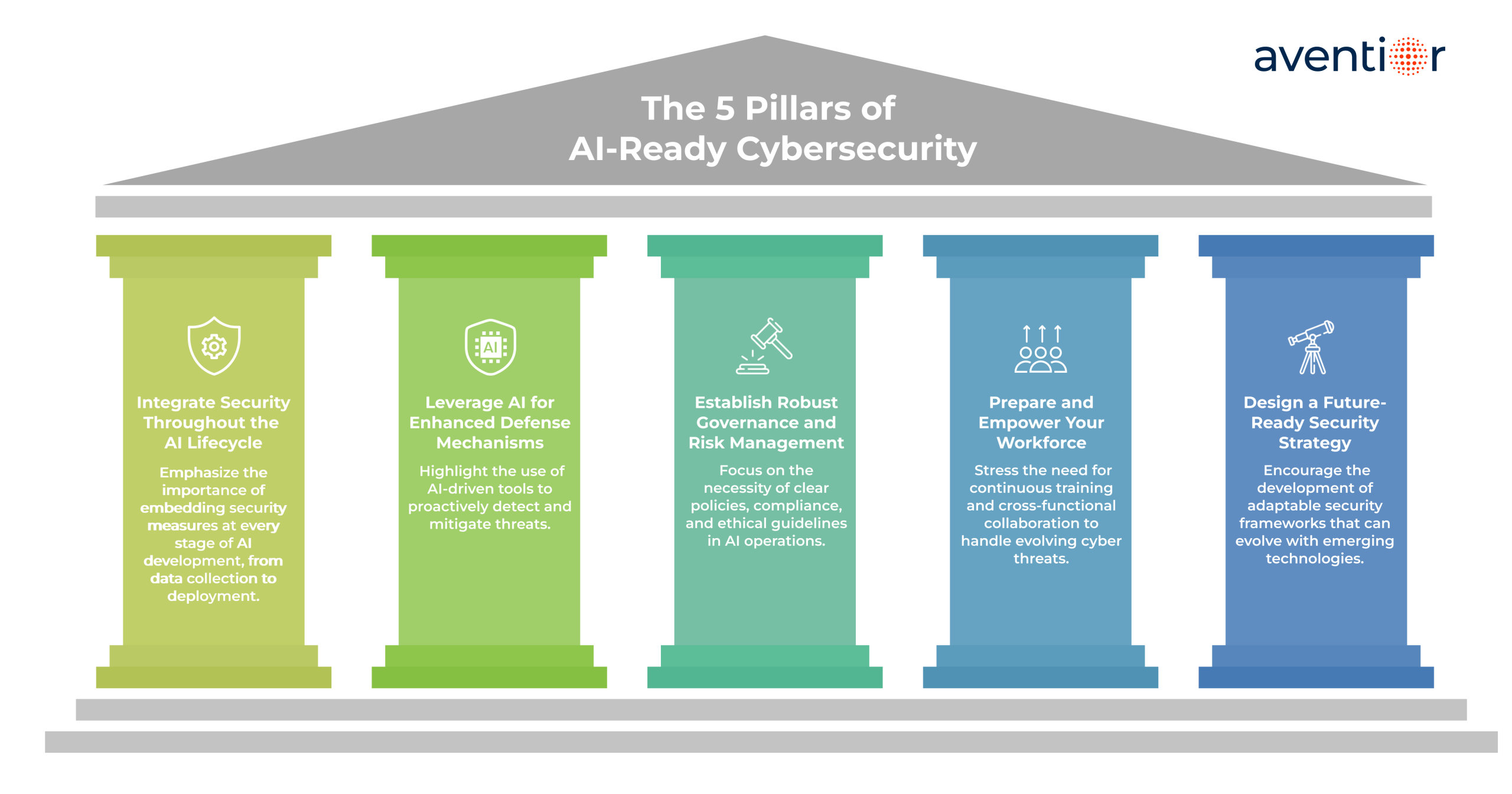

Building AI-Ready Cybersecurity: A Strategic Framework

As artificial intelligence becomes more embedded in our daily operations, the way we approach cybersecurity must evolve. Traditional security measures are no longer sufficient to protect the dynamic and autonomous nature of modern AI systems. To navigate this new landscape, organizations need a comprehensive strategy that addresses the unique challenges posed by AI. Here’s a breakdown of five essential pillars to guide your cybersecurity efforts in the age of intelligent automation.

1. Integrate Security Throughout the AI Lifecycle

Security isn’t a one-time setup; it’s an ongoing process that should be woven into every phase of your AI systems, from data collection to deployment and beyond.

- Data Integrity: Ensure that the data feeding your AI models is accurate and free from tampering. Implement validation checks and monitor for anomalies that could indicate data poisoning attempts.

- Model Training: Incorporate adversarial training techniques to make your AI models resilient against malicious inputs designed to deceive them.

- Deployment Oversight: Once deployed, continuously monitor your AI systems for unusual behaviors or unauthorized access attempts. This proactive approach helps detect potential threats early.

- Operational Resilience: Develop adaptive response mechanisms that allow your systems to quickly contain and recover from security breaches, minimizing potential damage.
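
One simple way to act on the Data Integrity point is to record cryptographic hashes of training files at a trusted moment and re-verify them before each training run. The sketch below uses Python's standard `hashlib` module to build and check such a manifest; the function names and file layout are hypothetical. It detects files that were modified, deleted, or added after the snapshot, but it cannot catch poisoned records that were already present when the manifest was created, so it complements rather than replaces statistical anomaly checks.

```python
import hashlib
import tempfile
from pathlib import Path

def sha256_file(path: Path, chunk_size: int = 65536) -> str:
    """Hash a file in chunks so large training files are never loaded whole."""
    digest = hashlib.sha256()
    with path.open("rb") as handle:
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()

def build_manifest(data_dir: Path) -> dict[str, str]:
    """Record a hash for every file under data_dir at a trusted point in time."""
    return {
        str(p.relative_to(data_dir)): sha256_file(p)
        for p in sorted(data_dir.rglob("*"))
        if p.is_file()
    }

def verify_manifest(data_dir: Path, manifest: dict[str, str]) -> list[str]:
    """Return a list of problems; an empty list means the data matches."""
    current = build_manifest(data_dir)
    problems = []
    for name, expected in manifest.items():
        if name not in current:
            problems.append(f"missing: {name}")
        elif current[name] != expected:
            problems.append(f"modified: {name}")
    for name in sorted(current.keys() - manifest.keys()):
        problems.append(f"unexpected: {name}")
    return problems

def demo_tamper_detection() -> list[str]:
    """Create a throwaway dataset, tamper with it, and report what changed."""
    with tempfile.TemporaryDirectory() as tmp:
        root = Path(tmp)
        (root / "train.csv").write_text("feature,label\n0.3,1\n")
        (root / "labels.csv").write_text("label\n1\n")
        manifest = build_manifest(root)  # trusted snapshot
        assert verify_manifest(root, manifest) == []
        (root / "train.csv").write_text("feature,label\n0.3,0\n")  # flipped label
        (root / "extra.csv").write_text("feature,label\n9.9,1\n")  # injected file
        return verify_manifest(root, manifest)

if __name__ == "__main__":
    print(demo_tamper_detection())
```

In practice the manifest itself would need to be stored somewhere the training pipeline cannot write to; otherwise an attacker who can alter the data could simply regenerate the hashes.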

2. Adopt AI-Powered Defense Systems

As cyber threats become more sophisticated, leveraging AI to bolster defense is not just beneficial; it’s essential.

- Real-Time Analytics: Utilize AI-driven platforms that can analyze vast datasets in near real time, identifying subtle anomalies that might escape human detection.

- Predictive Threat Modeling: Implement AI systems capable of anticipating potential threats, allowing you to strengthen defenses before vulnerabilities are exploited.

- Automated Incident Response: Speed is crucial in mitigating cyber threats. Automated systems can execute response protocols swiftly, reducing the window of opportunity for attackers.

Organizations adopting AI-enabled Security Operations Centers (SOCs) frequently report fewer security incidents, smaller breach impacts, and faster threat mitigation.
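
Automated incident response is usually driven by playbooks that map alert characteristics to actions. The sketch below shows one hypothetical playbook in which only high-severity, high-confidence alerts trigger automatic containment, while everything else goes to a human analyst. The alert fields, severity scale, confidence threshold, and action names are illustrative assumptions, not the schema of any particular SOC platform.

```python
from dataclasses import dataclass
from enum import Enum

class Action(Enum):
    """Hypothetical response steps a playbook can trigger."""
    LOG_ONLY = "log_only"
    NOTIFY_ANALYST = "notify_analyst"
    REVOKE_CREDENTIALS = "revoke_credentials"
    ISOLATE_HOST = "isolate_host"

@dataclass(frozen=True)
class Alert:
    source: str        # e.g. "edr" or "agent-monitor" (hypothetical names)
    severity: int      # 1 (low) to 5 (critical)
    confidence: float  # detector confidence in [0, 1]
    kind: str          # e.g. "credential_misuse", "malware", "anomaly"

def choose_actions(alert: Alert, auto_threshold: float = 0.9) -> list[Action]:
    """Map an alert to response steps under a hypothetical playbook."""
    if alert.severity <= 2:
        return [Action.LOG_ONLY]
    # Medium severity or uncertain detections go to a human first.
    if alert.severity < 4 or alert.confidence < auto_threshold:
        return [Action.NOTIFY_ANALYST]
    # High severity and high confidence: contain immediately, then notify.
    containment = (
        Action.REVOKE_CREDENTIALS
        if alert.kind == "credential_misuse"
        else Action.ISOLATE_HOST
    )
    return [containment, Action.NOTIFY_ANALYST]

print(choose_actions(Alert("agent-monitor", 5, 0.95, "credential_misuse")))
```

Keeping a human in the loop for lower-confidence alerts limits the damage a false positive can do, since automatically isolating a production host is itself disruptive. Where to draw that line is a policy decision each organization has to make.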

3. Establish Robust Governance and Risk Management

Effective governance ensures that your AI systems operate within defined ethical and regulatory boundaries.

- Technical Oversight: Conduct routine audits, penetration testing, and independent evaluations to maintain your security posture.

- Ethical Frameworks: Establish transparency, accountability, and ethical guidelines for AI use to foster trust among stakeholders.

- Compliance Management: Stay abreast of evolving regulations like the European Union’s AI Act and frameworks such as the NIST AI Risk Management Framework to ensure ongoing compliance.

- Risk Assessments: Continuously evaluate and mitigate risks associated with AI deployments, adapting your strategies as necessary.

A well-structured governance framework not only safeguards your organization but also builds confidence among clients and partners.
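
For agentic systems specifically, one practical way to keep an AI operating within defined boundaries is to enforce permissions outside the model: every tool call the agent proposes passes through a policy check that allows it, denies it, or holds it for human approval, and each decision is logged for later audit. The sketch below is a hypothetical, deliberately small version of that idea; the tool names, agent ID, and log format are invented for illustration. Because the check runs outside the model, instructions smuggled in through prompt injection cannot expand what the agent is permitted to do, although they can still misuse tools that are allowed.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import Optional

@dataclass
class ToolPolicy:
    """Hypothetical guard between an AI agent and the tools it can invoke."""
    allowed: set[str]                                      # tools the agent may ever call
    needs_approval: set[str] = field(default_factory=set)  # tools that need human sign-off
    audit_log: list[dict] = field(default_factory=list)    # one entry per decision

    def check(self, agent_id: str, tool: str, approved_by: Optional[str] = None) -> str:
        """Return "allow", "pending_approval", or "deny", and record the decision."""
        if tool not in self.allowed:
            decision = "deny"
        elif tool in self.needs_approval and approved_by is None:
            decision = "pending_approval"
        else:
            decision = "allow"
        self.audit_log.append({
            "time": datetime.now(timezone.utc).isoformat(),
            "agent": agent_id,
            "tool": tool,
            "approved_by": approved_by,
            "decision": decision,
        })
        return decision

# Example: a research agent may search freely but needs sign-off to send email.
policy = ToolPolicy(allowed={"search_docs", "send_email"}, needs_approval={"send_email"})
print(policy.check("agent-7", "search_docs"))                           # allow
print(policy.check("agent-7", "send_email"))                            # pending_approval
print(policy.check("agent-7", "send_email", approved_by="sec-review"))  # allow
print(policy.check("agent-7", "delete_records"))                        # deny
```

The audit log doubles as evidence for the routine audits and compliance reviews described above, since it records who approved which high-risk action and when.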

4. Prepare and Empower Your Workforce

The sophisticated nature of Agentic AI demands enhanced skills and cross-functional collaboration within cybersecurity teams:

- Upskilling Programs: Robust training that bridges cybersecurity, data science, AI development, and ethical considerations, equipping teams to address complex threats comprehensively.

- Cross-Disciplinary Collaboration: Encouraging seamless cooperation between IT, cybersecurity, compliance, business strategy, and operations teams, ensuring holistic security practices.

- Continuous Learning: Establishing structured programs for continual education, staying ahead of rapidly evolving technologies and emerging threats.

5. Design a Future-Ready Security Strategy

The Agentic AI revolution is still in its infancy. Over the next decade, proactive, predictive cybersecurity is likely to become a baseline expectation rather than an option. Strategic leaders must transition from reactive defense to a comprehensive predictive risk management model that embraces:

- Predictive Analytics: Leveraging AI to anticipate threats, rather than merely responding to them after the fact.

- Agile Response Models: Developing flexible cybersecurity frameworks capable of adapting quickly to new, unforeseen threats.

- Integrated Security Ecosystems: Merging technology, human oversight, ethical governance, and predictive intelligence into a unified cybersecurity approach.

Conclusion: Securing Your AI-Driven Future

The rise of Agentic AI presents both unprecedented opportunities and complex cybersecurity challenges. As these intelligent systems become integral to business operations, it’s imperative for organizations to proactively adapt their security strategies. By embedding robust security measures throughout the AI lifecycle, leveraging AI-driven defense mechanisms, establishing comprehensive governance frameworks, and fostering a culture of continuous learning, businesses can position themselves to not only mitigate risks but also to thrive in this new era.

At Aventior, we are committed to guiding organizations through this evolving landscape. Our expertise lies in developing tailored cybersecurity frameworks that address the unique needs of AI-driven environments. If you’re ready to fortify your organization’s defenses and embrace the future of intelligent automation securely, let’s work together to build a resilient and secure AI-powered future.

For more details about our solutions, email us at info@aventior.com.