Ships and planes carry most of the goods moved around the globe. More than 11 billion tons of cargo are transported around the world each year, including vehicles, chemical gases and liquids, and solid materials in containers. With these modes of transport carrying so much material, it becomes crucial to monitor and track their movement across the globe.

Satellite image analysis offers a good solution to this problem. It helps not only in tracking a given subject but also in monitoring the target's surroundings. By combining ship detection with data from other sources, such as other ships' routes and live status, many security features can be built. Pirate ships can be detected well before they get close to vulnerable ships, and ships that stray from their designated trajectory can be flagged. Search and rescue operations can also be improved in response time and location accuracy. With airplane detection, anti-collision systems can be made more efficient, and flight statistics can be gathered.

A huge number of applications can be built by applying Artificial Intelligence and Machine Learning to satellite images. These applications require large quantities of data, and with satellite images recently made available by many tech giants and government organizations, this has become an emerging field in the aerospace domain.

Rhammell on Kaggle provides cleaned datasets of satellite imagery, one for each category: planes and ships. Each image in these datasets has the object located at its center. A few full scenes are also provided to test the model's performance on large satellite images.

Ships Detection:

The Dataset:

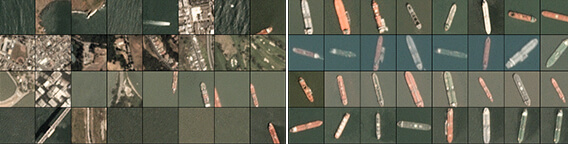

The latest version of the Ships dataset consists of 3600 RGB images of 80 x 80 pixels. The images were derived from PlanetScope full-frame visual scene products, orthorectified to a 3-meter pixel size. Along with the images, the corresponding labels (1: Ship; 0: No Ship) and the geographical location of each image's center point, as a latitude-longitude pair, are provided.

The image files are named in the following format:

{label} _ {scene id} _ {longitude} _ {latitude}.png
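
As a minimal sketch of how such a name could be split back into its fields, the helper below reads the label from the front and the two coordinates from the back, so a scene id that itself contains underscores stays intact. The function name `parse_image_name` and the example filename are hypothetical, and the exact separator used in the real files is an assumption.

```python
def parse_image_name(filename):
    """Split '{label}_{scene id}_{longitude}_{latitude}.png' into its fields.

    Hypothetical helper: the label is taken from the front and the two
    coordinates from the back, so underscores inside the scene id are kept.
    """
    name = filename[:-4] if filename.endswith(".png") else filename
    label, rest = name.split("_", 1)
    scene_id, longitude, latitude = rest.rsplit("_", 2)
    return {
        "label": int(label.strip()),
        # Remove leftover separators (underscores or spaces) around the id.
        "scene_id": scene_id.strip("_ "),
        "longitude": float(longitude),
        "latitude": float(latitude),
    }


# Made-up filename, used only to illustrate the format.
info = parse_image_name("1__20170501_181320_0e1f__-122.35_37.77.png")
print(info["label"], info["scene_id"], info["longitude"], info["latitude"])
```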

The dataset consists of 900 images labeled as 1 (i.e. Ship) and 2700 images labeled as 0 (i.e. No Ship). The data is also available in .json format.

The following images show some examples from the No Ship category. These include images that contain no ship at all or only a portion of a ship (i.e. not enough to be categorized as a ship).

Data Preparation:

As the dataset is comparatively small, we use image augmentation to add further variants of the images. Here we use feature-wise centering and feature-wise normalization, together with random horizontal and vertical flips and random rotation.
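
The augmentation steps described here can be sketched in plain NumPy. This is an illustrative approximation, not the pipeline actually used: the function names are hypothetical, the statistics are assumed to be computed per color channel over the whole training set, and rotation is simplified to random multiples of 90 degrees.

```python
import numpy as np


def fit_featurewise_stats(images):
    """Per-channel mean and standard deviation over an (N, H, W, C) array."""
    mean = images.mean(axis=(0, 1, 2))
    std = images.std(axis=(0, 1, 2))
    return mean, std


def augment(image, mean, std, rng):
    """Normalize one (H, W, C) image, then flip and rotate it at random."""
    out = (image - mean) / (std + 1e-6)  # feature-wise centering and scaling
    if rng.random() < 0.5:
        out = out[:, ::-1, :]  # horizontal flip (mirror left-right)
    if rng.random() < 0.5:
        out = out[::-1, :, :]  # vertical flip (mirror top-bottom)
    # Simplification: rotate by a random multiple of 90 degrees only.
    quarter_turns = int(rng.integers(0, 4))
    return np.rot90(out, k=quarter_turns, axes=(0, 1))


rng = np.random.default_rng(0)
# Random stand-in data with the shape of the ship images.
images = rng.random((16, 80, 80, 3))
mean, std = fit_featurewise_stats(images)
sample = augment(images[0], mean, std, rng)
print(sample.shape)
```

Calling `augment` again on the same image generally yields a different variant, which is what lets a small dataset go further during training.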

Machine Learning Model:

The model used here is an 8-layer Convolutional Neural Network with three 2D convolutional layers, two 2D max-pooling layers, two fully connected layers, and a dropout layer. The Adam optimizer is used to optimize the weights, with categorical cross-entropy as the loss function. The model is trained with a batch size of 128 for 50 epochs. The data is randomly shuffled at each epoch and split in an 80-20 ratio for training and validation. The final validation accuracy is close to 95%.
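
To make the data handling concrete, here is a small sketch of an 80-20 split followed by per-epoch reshuffling into batches of 128. It uses only the standard library and operates on sample indices; the function names are hypothetical, and a deep learning framework would normally handle this step internally.

```python
import random


def shuffled_split(items, train_fraction=0.8, seed=None):
    """Shuffle once, then cut into training and validation parts."""
    rng = random.Random(seed)
    shuffled = list(items)
    rng.shuffle(shuffled)
    cut = round(len(shuffled) * train_fraction)
    return shuffled[:cut], shuffled[cut:]


def epoch_batches(train_items, batch_size=128, rng=None):
    """Reshuffle the training items and slice them into batches for one epoch."""
    rng = rng or random.Random()
    order = list(train_items)
    rng.shuffle(order)
    return [order[i:i + batch_size] for i in range(0, len(order), batch_size)]


# 3600 sample indices, matching the size of the Ships dataset.
train, val = shuffled_split(range(3600), seed=0)
batches = epoch_batches(train, batch_size=128, rng=random.Random(0))
print(len(train), len(val), len(batches))
```

With 3600 images this gives 2880 training and 720 validation samples, so each epoch runs 23 batches, the last one only half full.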

Output:

The trained model is used to locate ships in satellite scenes, which can be of varying sizes. Each scene is scanned with a sliding window, using a window size of 80 pixels and a stride of 10 pixels. If a ship is detected in a window, an 80 x 80 bounding box is drawn around it in red.
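
The scan can be sketched as follows. The window size and stride defaults come from the text; `is_target` is a hypothetical stand-in for cropping the window and running the trained model on it, and both function names are illustrative.

```python
def window_origins(scene_width, scene_height, window=80, stride=10):
    """Yield the top-left (x, y) corner of every window that fits in the scene."""
    for y in range(0, scene_height - window + 1, stride):
        for x in range(0, scene_width - window + 1, stride):
            yield x, y


def detect(scene_width, scene_height, is_target, window=80, stride=10):
    """Return bounding boxes (x0, y0, x1, y1) for windows flagged by is_target.

    is_target(x, y, window) -> bool is a hypothetical placeholder for the
    classifier applied to the crop at that position.
    """
    return [
        (x, y, x + window, y + window)
        for x, y in window_origins(scene_width, scene_height, window, stride)
        if is_target(x, y, window)
    ]


# Toy classifier: pretend a ship sits in the window whose corner is (10, 0).
boxes = detect(100, 90, lambda x, y, w: (x, y) == (10, 0))
print(boxes)
```

A smaller stride checks more windows, which raises the chance of centering on an object but also multiplies the number of model calls.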

The output shows that the model is not 100% accurate: it produces some false positives (some land structures are classified as ships) as well as some false negatives (some ships are missed, only one in this case).

There is always a trade-off between false positives and false negatives. The balance can be shifted by further fine-tuning the model or the detection parameters; for example, changing the stride of the detection window changes the errors. Depending on the end application, we can optimize for either sensitivity or specificity.
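
For reference, sensitivity and specificity follow directly from the counts of true and false detections. The sketch below uses the standard definitions; the counts in the example are made up for illustration and are not results from this model.

```python
def sensitivity(true_positives, false_negatives):
    """Share of actual targets that were detected: TP / (TP + FN)."""
    total = true_positives + false_negatives
    return true_positives / total if total else 0.0


def specificity(true_negatives, false_positives):
    """Share of non-targets correctly rejected: TN / (TN + FP)."""
    total = true_negatives + false_positives
    return true_negatives / total if total else 0.0


# Hypothetical counts, for illustration only.
print(sensitivity(true_positives=9, false_negatives=1))
print(specificity(true_negatives=95, false_positives=5))
```

A search and rescue application would usually favor sensitivity, since a missed ship costs more than a false alarm, while a high-volume monitoring system may prefer specificity to keep false alerts manageable.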

Planes Detection:

The Dataset:

The latest version of the Planes dataset consists of 32000 RGB images of 20 x 20 pixels. The dataset is labeled as 1: Plane, 0: No-Plane. It consists of 8000 images labeled as 1 (i.e. Plane) and 24000 images labeled as 0 (i.e. No-Plane).

The dataset is also available in .json format.

Data preparation:

The data preparation steps are the same as those used for ship detection. We use random horizontal and vertical flips and random rotation, along with feature-wise centering and normalization.

Machine Learning Model:

As the task is the same as the ship detection problem, we reuse the same neural network architecture: an 8-layer Convolutional Neural Network with three 2D convolutional layers, two 2D max-pooling layers, two fully connected layers, and a dropout layer. The Adam optimizer is used to optimize the weights, with categorical cross-entropy as the loss function.

The model is trained with a batch size of 128 for 50 epochs. The data is randomly shuffled at each epoch and split in an 80-20 ratio for training and validation. The final validation accuracy lies between 85% and 90%.

Output:

The trained model is used to locate planes in satellite scenes. Each scene is scanned with a sliding window, using a window size of 20 pixels and a stride of 2 pixels. If a plane is detected in a window, a 20 x 20 bounding box is drawn around it in red.